Hello 2019! Time to get back on track. I have been playing around with Google's Data Studio for a few months now and have been having fun. Limitations do exist but they get the job done.

I found a dataset that contained the number of public school enrollments in the Philippines, cleaned the dataset using pandas library and used Data Studio for the visualization. I looked around the interwebs for latitude and longitude of the different cities and provinces around the Philippines and merged it with the original dataset. I leveraged the Geo map feature of Data Studio to plot the different points.

You may visit my dataviz project and tell me what you think. datasets and source code be found here.

deng's mighty adventures in python

Here you will find Deng's discoveries and misfortunes in Python.

Thursday, January 3, 2019

Tuesday, May 8, 2018

Get intersection between 2 columns using Pandas

I came across a task wherein I had to work on ticket logs extracted as an excel file. Each ticket field was assigned a column. Ticket number, service name, summary and work log are some of the column names. The specific task is to check if engineer names appeared on the work log column. I was able to do this in excel by creating an array with the engineer names and created a new column with the formula:

It did the trick. It shows true if the name appeared else, it would yield false.

However...I wanted to do more. I wanted to output which names appeared in the work log instead of just true or false. I got crazy in googling the correct formula, so I tried to use the Pandas library to find the intersection.

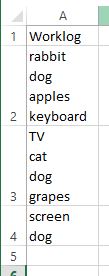

Here is a sample file that I used. I called it 'Book1.xlsx'. Column A containts the Worklog with random strings.

The names in this case would be "dog" and "cat". The task would be to check if "dog" and "cat" appeared in the "Worklog" column.

To do this, import pandas and initiate the names. In this case, I used "uniqueNames" for "dog" and "cat". The converted the string to a list.

The file is opened and assigned to the variable df.

Processing the columns:

Here is the output of df. This is the same as the output of the excel formula.

Now....to do more.

And here is the output for for the final dataframe (finalDf)

These are the required information:

- Worklog contains the original strings.

- Intersection Y/N performed the excel formula mentioned earlier.

- intersect showed which texts appeared in the Worklog

Finally, the output may be saved to excel by using. I hope this helps the interwebs!

Friday, June 16, 2017

Seismic Events for Q1 2017

Plot it here, there and everywhere...

My friend showed me the Phivolcs' data set on seismic activities and asked if I can interpret the data. I reviewed it and figured it would be great to add to my portfolio. At the same time, my Coursera assignment's due was almost up. We were tasked to pull data from the web and provide interpretation and data visualization. Sort of putting everything we learned all together...being an independent data scientist. I decided to use the Phivolcs data for the assignment. Hitting 2 birds with one stone...heh!

I started to work on the 2014 data since it showed all the data in 1 page. I used BeautifulSoup to scrape the data and I almost went insane after a week of working on the HTML file. I did not get the format that I needed.

So... To make things easier, I just used the 2017 data since the HTML tables were well formatted. I extracted a 3-month data sample of seismic activities from Phivolcs and converted the data to dataframes using Pandas. I used Matplotlib to plot the earthquake magnitudes and leveraged the Basemap toolkit to draw the map and plot the magnitudes in their respective longitude and latitude coordinates. Ha!

The complete python code may be found here. If you interested with the source file, I have extracted the raw logs as and saved as .csv here.

Here is a breakdown of my source code

Import the essential libraries needed. I am running Python 3.5 over Windows 8.1. As far as I can remember, I updated matplotlib to get the basemap library. I ran the command pip install matplotlib update under the administrator account to initiate the task. You may refer here for the documentation on basemap.

The Q1 data was extracted from Phivolcs' site. The monthly data were encoded on separate pages and were extracted separately. The read_HTML function would have an output of list of dataframes. After inspection, the needed dataframes were on the 3rd index and were extracted respectively. The 3 dataframes were then concatenated and named as df. The dataframe columns were renamed for proper labeling. Saving the file is only optional. I used to_csv to export the file.

Finally! Time to draw! Basemap is pretty easy to follow. (Not!) I used the Transverse Mercator Projection to have a realistic map.I also used the colorbar to aid in the data representation.

This tutorial helped me a lot in the visualization using Basemap. I hope it can help you too.

And....the output!

Labels:

basemap,

BeautifulSoup,

matplotlib,

pandas,

python

Thursday, May 4, 2017

Plotting Temperatures

I recently completed the 2nd assignment in the Coursera course. I had so much fun and had to post it on my blog! Ha!

We were tasked to analyze an 11-year data set which contains the maximum and minimum temperatures for everyday from 2005 to 2015. 2005 to 2014 data was sorted and 2015 data was set aside. The maximum and minimum temperatures per day were plotted. Feb 29 data was removed in the data to keep it clean. Finally, 2015 data was processed and temperature outliers were highlighted.

The following libraries were used.

The data set came from comes from a subset of The National Centers for Environmental Information (NCEI) Daily Global Historical Climatology Network (GHCN-Daily). The GHCN-Daily is comprised of daily climate records from thousands of land surface stations across the globe.

Additional columns were added to df for further processing.

Created more dataframes! More fun! At this stage, the maximum and minimum temperatures per day were identified. For example, all temps for November 24 of 2005 to 2014 were collected and the highest (or lowest) temperature was selected and placed to the new dataframe.

Plotting! I no matplotlib expert (yet) but thanks to stackoverflow, I survived. Yey! I did try to minimize the noise to put more emphasis on the data requirement.

Finally, here is the output.

The complete code may be found here.

TrulyRichClub.com - Do You Want to Gain Financial Wealth and Spiritual Abundance at the Same Time?

We were tasked to analyze an 11-year data set which contains the maximum and minimum temperatures for everyday from 2005 to 2015. 2005 to 2014 data was sorted and 2015 data was set aside. The maximum and minimum temperatures per day were plotted. Feb 29 data was removed in the data to keep it clean. Finally, 2015 data was processed and temperature outliers were highlighted.

The following libraries were used.

The data set came from comes from a subset of The National Centers for Environmental Information (NCEI) Daily Global Historical Climatology Network (GHCN-Daily). The GHCN-Daily is comprised of daily climate records from thousands of land surface stations across the globe.

- The original dataframe has 165085 rows and 4 columns and was assigned to the variable df.

- The 'Data_Value' column is in tenths of degrees C.

Additional columns were added to df for further processing.

- February 29 from leap years were also removed at this stage.

- 'temp_in_C' was used to convert 'Data_Value' to Celcius. Spyder was able to process the /10 operation but Jupyter kept on crashing. One of the mentors advised that the division operation consumes alot of memory thus causing Jupyter notebook to crash. I opted to use *.10 instead.

Created more dataframes! More fun! At this stage, the maximum and minimum temperatures per day were identified. For example, all temps for November 24 of 2005 to 2014 were collected and the highest (or lowest) temperature was selected and placed to the new dataframe.

- I felt comfortable using pivot_table but groupby function may be used as well.

- 2015 was excluded in the dataframe. We needed to identify which 2015 temperature exceeded the 2005 to 2014 data.

Plotting! I no matplotlib expert (yet) but thanks to stackoverflow, I survived. Yey! I did try to minimize the noise to put more emphasis on the data requirement.

Finally, here is the output.

The complete code may be found here.

TrulyRichClub.com - Do You Want to Gain Financial Wealth and Spiritual Abundance at the Same Time?

Labels:

data science,

jupyter,

matplotlib,

numpy,

pandas,

python,

spyder

Friday, April 7, 2017

Let's Bot In!

I was trying out the steps on building a slack bot and I would like to share my notes with you guys.

Here are some takeaways on this blog:

1. How to set the base Python Version in your virtual environment.

2. How to switch to the created virtual environments.

3. How to export environment variables in windows.

Let's start!

I am running a Windows 8.1 machine with both python 2.7 and 3.5 installed in their respective folders.

c:\Python27

c:\Python35

Part of the process is to create a virtual environment to have an independent library structure and avoid conflicts. I created a virtual environment via PowerShell and used python 3.5 as the base. Here's a guide on how to create virualenv on Windows with PowerShell.

To run PowerShell in Windows, go to the command prompt and run:

C:\>powershell

By default, my machine is runnning Python 2.7. To set the base Python version of the virtual environment to 3.5, perform the steps below:

Go to your virtual environmnt directory:

Set the base Python version:

To work on or switch to other virtual environments, use the command:

One of the challenges I had was how to export the secret tokens as environment variables.

The line below does not work for me.

The syntax is different for windows. After searching the interwebs, this article helped me out. I replaced the line instead with the script below and I was able to extract the bot ID.:

That's about it! I just followed the steps and was able to deploy a slack bot! Developing the bot brains is a different story, but hey! I got it working... hohohoho..

Friday, March 31, 2017

Code used for the Grab Challenge 2017

Yey! we did not win!

Let me share our scripts for the submission on: The DataSeer Grab Challenge 2017

First, let us import the needed libraries.

Then process the data set using pandas. First, read_csv to open the file.We used the column "created_at_local" to derive the "date", "time" and "day of week" columns using the datetime library.

Here is a sample of the extracted dataframe. The dataframe has 265073 rows and 13 columns.

The "city" and "city_only" dataframes were created. We'll keep "city" for later (see Fig 3).

Findings on Pickup Distance:

Fig 1: This is a summary of 2013 trips showing the average pickup distance (in Km.) per city. The data shows us that a trip gets cancelled if average pickup point is 1.8Km away and would be completed below the 1.8Km mark.

Findings on AAR:

Fig 2: This graph shows the Actual Allocation Rate (AAR) per city for 2013. AAR is below 50% for all cities.

Recommendation on AAR:

Fig 3: If sources are considered for the AAR data, it can be seen that VNU for Metro Manila has the highest AAR which is above 50%. We have utilized the "city" dataframe here. *It might be a good idea to promote VNU in other cities.

Findings on the Relationship of Trip State with Time:

Fig 4: This graph shows the number of trip status per hour in Cebu. Cebu had a high unallocated rate from 5pm to 7pm.

Fig 5: This graph shows the number of trip status per hour in Davao. It is seen that Davao had a high unallocated rate at around 5pm and started to go down by 8pm.

Fig 6: This graph shows the number of trip status per hour in Metro Manila. Metro Manila had an initial spike on unallocated trips which started on the early commute hour of 5am and dropped at around 10 am and another spike which started to rise at 3pm and went down by 9pm. Highest peak at around 6pm.

Fig 7: Cebu had high travel count during Monday, Friday and Saturday. The daily dataframe shows the trip status and fare per day sorted per city. This also shows the daily daily pickup distance and corresponding trip status.

Fig 8: Davao peaked its travel count every Thursday.

Fig 9: Metro Manila's Peak is Friday.

We hope you have picked up a thing or two...Your comments are very welcome!

...pardon on the html boxes...lol

Let me share our scripts for the submission on: The DataSeer Grab Challenge 2017

First, let us import the needed libraries.

Then process the data set using pandas. First, read_csv to open the file.We used the column "created_at_local" to derive the "date", "time" and "day of week" columns using the datetime library.

Here is a sample of the extracted dataframe. The dataframe has 265073 rows and 13 columns.

The "city" and "city_only" dataframes were created. We'll keep "city" for later (see Fig 3).

Findings on Pickup Distance:

Fig 1: This is a summary of 2013 trips showing the average pickup distance (in Km.) per city. The data shows us that a trip gets cancelled if average pickup point is 1.8Km away and would be completed below the 1.8Km mark.

|

| Fig 1: ax1 |

Fig 2: This graph shows the Actual Allocation Rate (AAR) per city for 2013. AAR is below 50% for all cities.

|

| Fig 2: ax2 |

Fig 3: If sources are considered for the AAR data, it can be seen that VNU for Metro Manila has the highest AAR which is above 50%. We have utilized the "city" dataframe here. *It might be a good idea to promote VNU in other cities.

|

| Fig 3: ax3 |

Fig 4: This graph shows the number of trip status per hour in Cebu. Cebu had a high unallocated rate from 5pm to 7pm.

|

| Fig 4: ax4 |

Fig 5: This graph shows the number of trip status per hour in Davao. It is seen that Davao had a high unallocated rate at around 5pm and started to go down by 8pm.

|

| Fig 5: ax5 |

|

| Fig 6: ax6 |

Fig 7: Cebu had high travel count during Monday, Friday and Saturday. The daily dataframe shows the trip status and fare per day sorted per city. This also shows the daily daily pickup distance and corresponding trip status.

|

| Fig 7: ax7 |

Fig 8: Davao peaked its travel count every Thursday.

|

| Fig 8: ax8 |

Fig 9: Metro Manila's Peak is Friday.

|

| Fig 9: ax9 |

...pardon on the html boxes...lol

Tuesday, March 21, 2017

We have declared war with Pie Graphs!

I signed up at meetup.com to check on upcoming IT conferences. Initially, I was interested in topics such as DevOps, AWS and Cloud Operations. I scanned thru the topics and well...who am I kidding? I won't be able to catch up with the folks there. I am way behind in Operations Technology! Ha!

I did mention before that I started a "journey" (ugh) in Python which led to Studying Data Science. So...I searched for topics related to both and found a couple...ok..Just 1. DataSeer hosted an event last January 19, 2017 entitled "The Art of Data Story Telling" and this is where we declared war with Pie Graphs! Argh!

During the first few minutes of the talk, I got my big take away...it says, "Your data presentation should communicate the Big Take Away clearly" Ha!

Edward Tufte's concepts were also discussed. It mainly revolved on a minimalist approach in data presentation. Always mind the Data Ink Ratio. Avoid bad visualization, resist 3D! Additional ink only distracts. Above all else, show the data.

The minimalist approach really made sense...Until I came across the work of Nigel Holmes. "Nigel Holmes, whose work regularly incorporates strong visual imagery into the fabric of the chart" (I got this some where and I guess it would be safe to quote it...lol)... OK...I'm sleepy now, you can read more of Nigel Holmes here.

I did mention before that I started a "journey" (ugh) in Python which led to Studying Data Science. So...I searched for topics related to both and found a couple...ok..Just 1. DataSeer hosted an event last January 19, 2017 entitled "The Art of Data Story Telling" and this is where we declared war with Pie Graphs! Argh!

During the first few minutes of the talk, I got my big take away...it says, "Your data presentation should communicate the Big Take Away clearly" Ha!

Edward Tufte's concepts were also discussed. It mainly revolved on a minimalist approach in data presentation. Always mind the Data Ink Ratio. Avoid bad visualization, resist 3D! Additional ink only distracts. Above all else, show the data.

The minimalist approach really made sense...Until I came across the work of Nigel Holmes. "Nigel Holmes, whose work regularly incorporates strong visual imagery into the fabric of the chart" (I got this some where and I guess it would be safe to quote it...lol)... OK...I'm sleepy now, you can read more of Nigel Holmes here.

Subscribe to:

Comments (Atom)